Technologies

High throughput network backbone with NVIDIA SN3700 switch featuring 32x QSFP28 ports supporting a total throughput of up to 6.5 Tb/s. Two such switches (up to 13 Tb/s combined) should thus provide enough capacity to get us close to 2x 7 Tb/s of east-west traffic, the current specification for SKAO’s SPC to emulate the input of the Science Data Processor.

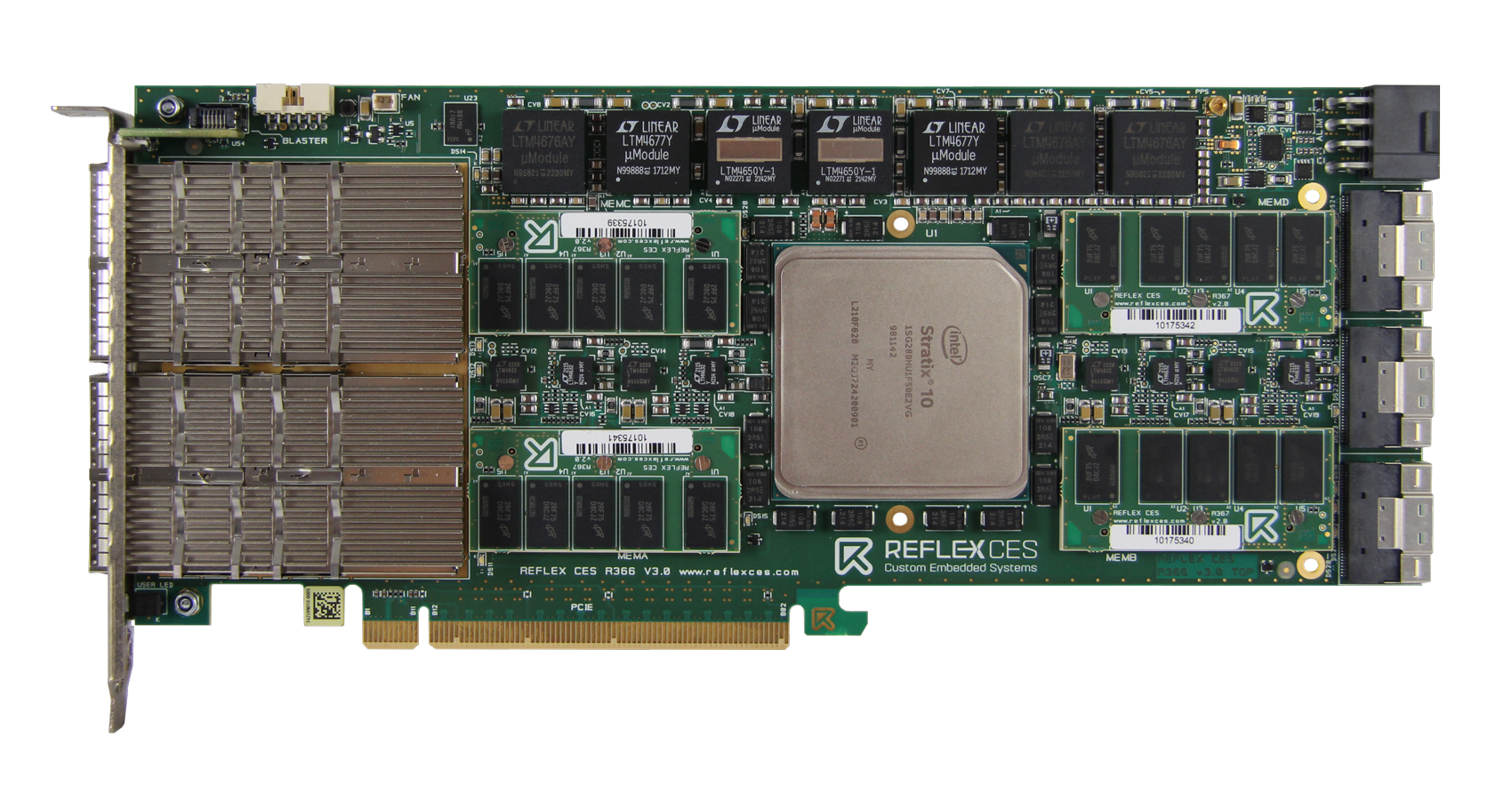
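As a rough sanity check on the backbone sizing, the sketch below compares the nominal aggregate throughput of two switches with the 2x 7 Tb/s target. It uses only the figures quoted above and assumes perfect linear scaling across switches, which a real deployment is unlikely to reach; the function and constant names are illustrative.

```python
# Nominal figures quoted in the text, not measurements.
SWITCH_THROUGHPUT_TBPS = 6.5   # per-switch throughput as stated above
TARGET_TBPS = 2 * 7.0          # 2x 7 Tb/s east-west traffic target


def aggregate_throughput(n_switches: int, per_switch_tbps: float) -> float:
    """Nominal aggregate throughput, assuming ideal linear scaling."""
    return n_switches * per_switch_tbps


aggregate = aggregate_throughput(2, SWITCH_THROUGHPUT_TBPS)
coverage = aggregate / TARGET_TBPS  # fraction of the target that is covered

print(f"aggregate: {aggregate:.1f} Tb/s, coverage: {coverage:.1%}")
# prints: aggregate: 13.0 Tb/s, coverage: 92.9%
```

Under these assumptions, two switches cover roughly 93% of the target, which is consistent with "close to" rather than "equal to" 2x 7 Tb/s.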

Smart data emulation with Reflex CES FPGA solutions: 800 GbE boards that will be used as building blocks for the data emulation sub-system. Using 8 such boards (6.4 Tb/s in total) would bring us very close to our initial high-level requirement of 7 Tb/s.

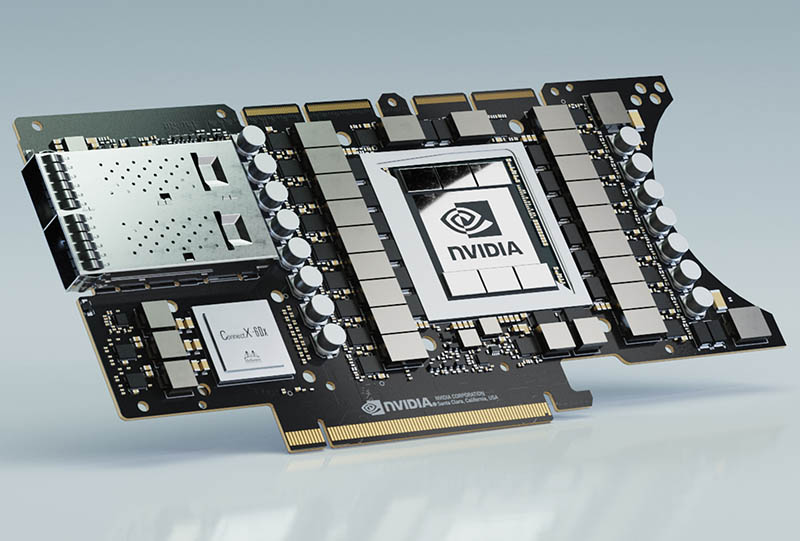
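The board count for the emulator can be checked with the same kind of arithmetic. This is a minimal sketch that assumes each board sustains its full 800 Gb/s line rate; the helper name `emulator_budget` is hypothetical and not part of any STREAMS software.

```python
import math

BOARD_RATE_GBPS = 800   # one 800 GbE board, nominal line rate
TARGET_GBPS = 7_000     # initial high-level requirement of 7 Tb/s


def emulator_budget(n_boards: int,
                    board_rate_gbps: int = BOARD_RATE_GBPS,
                    target_gbps: int = TARGET_GBPS) -> tuple[int, int]:
    """Return (total rate, shortfall against the target), both in Gb/s."""
    total = n_boards * board_rate_gbps
    return total, target_gbps - total


total, shortfall = emulator_budget(8)
# Smallest number of boards whose nominal rate meets the target.
boards_needed = math.ceil(TARGET_GBPS / BOARD_RATE_GBPS)

print(f"8 boards: {total} Gb/s, shortfall: {shortfall} Gb/s")
print(f"boards needed for the full target: {boards_needed}")
```

With 8 boards the nominal total is 6.4 Tb/s, about 0.6 Tb/s below the requirement; a ninth board would be needed to meet it in full at nominal line rate.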

Smart data ingest with NVIDIA A100X DPU, a representative example of a new generation of reprogrammable high-performance processors linked to high-performance network interfaces. This technology will be used to realize a smart interface able to ingest, process and reduce online the large volumes of data coming from the emulator sub-system.

Energy-efficient AI workflows with Graphcore Bow IPU, a new type of processor based on a highly parallel architecture designed to accelerate deep learning applications. These processors offer very high computing throughput on mixed-precision floating-point data and have a unique memory architecture. This technology will be used not only for deep learning applications but also for more classical workflows and a mix of both, with performance and energy efficiency as key indicators.

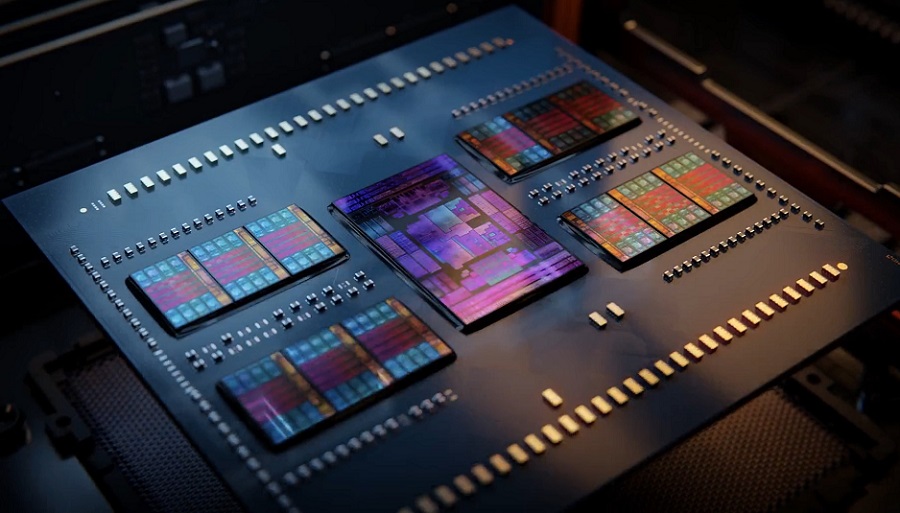

Modular workflow scheduling with AMD EPYC Genoa CPU. With 96 cores and large-capacity L3 caches, these high-end CPUs will be integrated in most sub-systems of STREAMS to efficiently orchestrate a large number of concurrent workloads within the platform, including real-time data emulation, low-latency data ingestion and processing, and supporting operations within the supervisory node.

High performance real-time computing with AMD MI210 GPU, offering coherent CPU-to-GPU interconnect, high-capacity HBM2e memory and enhanced Matrix Core Technology. This technology will be assessed in the context of time-sensitive data processing within the stream processing system.

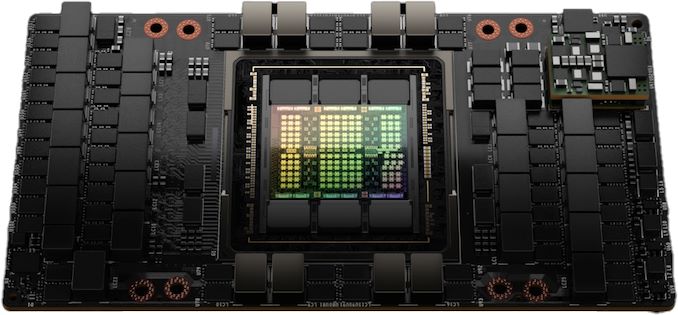

High performance AI training with NVIDIA H100 GPU. Thanks to a tightly integrated cluster of high-end GPUs (up to 4x in SXM form factor with NVLink interconnect), a dedicated supervisory node will enable online high-performance training of neural networks and efficient coupling of these training resources with the time-critical pipeline.